Automated driving in all its forms is rapidly becoming more common. Vehicles from most manufacturers can now be equipped with driver-assistance systems that allow the vehicle to drive itself, at least for a short while in ideal conditions.

Smoother traffic by using shared data

Driver-assistance systems typically consist of different sensors that monitor the road environment, such as cameras and laser scanners, as well as radio frequency and ultrasonic proximity sensors. These sensors produce huge amounts of accurate and up-to-date data about the road environment. This data can be shared online to automatically update geospatial data. As a result, each moving vehicle becomes a precision instrument that helps to easily collect geospatial data as a by-product of traffic flows.

Sensor data collected from the road environment can be processed and used in various ways, for example, to define road maintenance activities, to monitor road conditions, to continuously update map data and to optimise the flow of traffic and public transport. Above all, geospatial data that is updated frequently according to traffic flows significantly improves traffic safety, as information about any accidents and irregularities can be quickly conveyed to the authorities and other road users.

Falling snow presents challenges

Geospatial data can be collected automatically from the road environment by using the same computational methods that help vehicles to drive themselves. Research into the collection of geospatial data therefore also helps to resolve many problems associated with the performance and reliability of autonomous vehicles.

The greatest technological challenges are related to the ability of autonomous vehicles to perceive and understand their operating environment in difficult conditions, such as when it is raining or snowing or when the environment changes according to the time of the day or year. Systems must operate consistently in all conditions, including difficult ones. This places high demands on vehicle sensors and computational data processing methods, which is why it is important to study the methods currently in use and the questions that remain open.

Vehicles also need various senses

Autonomous vehicles must be able to understand and interpret their environment on the basis of sensor data. They should be able to get all useful information from this data in order to ensure smooth and safe travel and to collect usable geospatial data.

The ability of autonomous systems to observe can easily be compared to people’s observation skills: our ability to make observations is limited when we only use one of our senses. When we use several senses, we can obtain more reliable information about our environment. In the same way, computational methods obtain a more reliable understanding of the surrounding world when several different sensor types measure physical variables in ways that supplement one another.

Sensor fusion merges sensor data into a format in which the measurements can be processed easily. The simplest way to perform sensor fusion is to convert the measurements of all sensors into a single, common coordinate system.
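
As a rough illustration of conversion into a common coordinate system, the following minimal sketch transforms 2D points from two sensor frames into one vehicle frame. The function name `to_vehicle_frame`, the mounting positions and angles, and the sample points are all assumptions made for this example; a real system would work in 3D with calibrated sensor poses.

```python
import numpy as np

def to_vehicle_frame(points_sensor, yaw_rad, translation):
    """Rotate and shift 2D points from a sensor frame into the vehicle frame.

    yaw_rad and translation describe where the sensor is mounted on the
    vehicle (hypothetical values below, not real calibration data).
    """
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    rotation = np.array([[c, -s], [s, c]])
    # Row vectors: p_vehicle = R @ p_sensor + t
    return points_sensor @ rotation.T + translation

# Assumed laser scanner: 1.5 m ahead of the vehicle origin, facing forward.
lidar_points = np.array([[10.0, 0.0], [5.0, 2.0]])
lidar_in_vehicle = to_vehicle_frame(lidar_points, 0.0, np.array([1.5, 0.0]))

# Assumed camera: 2.0 m ahead of the origin, rotated 90 degrees to the left.
camera_points = np.array([[8.5, 0.0]])
camera_in_vehicle = to_vehicle_frame(camera_points, np.pi / 2, np.array([2.0, 0.0]))

# Once in the same frame, the observations can be stacked and processed together.
fused_points = np.vstack([lidar_in_vehicle, camera_in_vehicle])
print(fused_points)
```

After the transformation, a point seen by the laser scanner and a point seen by the camera can be compared directly, because both are expressed relative to the same vehicle origin.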

Vehicles must be able to interpret their environment

A single coordinate system is often used as the representation when the aim is to group environmental objects into different categories. In the road environment, key categories include cars, pedestrians, bicycles, traffic lights, traffic signs and road markings. Various good methods are available for classifying environmental objects, but machine learning algorithms, such as neural networks, are generally considered the most effective.

During the training phase, a machine learning algorithm is given large amounts of previously collected sensor data in which a person has already identified and labelled the objects belonging to each category. Using this data, the algorithm learns features with which it can separate the categories from each other.
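
The idea of learning from labelled examples can be sketched with a much simpler method than a neural network. The example below uses a nearest-centroid classifier as a stand-in: it averages the labelled examples of each category and assigns a new object to the closest average. The two features (object length and height in metres) and all sample values are invented for illustration only.

```python
import numpy as np

# Hypothetical labelled training data: [length_m, height_m] per object.
training_features = np.array([
    [4.5, 1.5], [4.2, 1.4], [4.8, 1.6],   # labelled "car"
    [0.5, 1.7], [0.6, 1.8], [0.4, 1.6],   # labelled "pedestrian"
    [1.8, 1.1], [1.7, 1.2], [1.9, 1.0],   # labelled "bicycle"
])
training_labels = np.array(["car"] * 3 + ["pedestrian"] * 3 + ["bicycle"] * 3)

def train_centroids(features, labels):
    """Training phase: compute the mean feature vector of each category."""
    return {
        str(label): features[labels == label].mean(axis=0)
        for label in np.unique(labels)
    }

def classify(centroids, sample):
    """Assign the sample to the category with the nearest centroid."""
    return min(centroids, key=lambda label: np.linalg.norm(centroids[label] - sample))

centroids = train_centroids(training_features, training_labels)
print(classify(centroids, np.array([4.4, 1.5])))
print(classify(centroids, np.array([0.5, 1.75])))
```

A neural network replaces the hand-picked features and the simple averaging with features it learns itself, but the workflow is the same: labelled examples go in during training, and the trained model is then applied to new observations.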

Most machine learning methods used for classification, such as convolutional neural networks, can already learn useful features from a few hundred examples labelled by people beforehand. However, it is characteristic of machine learning that accuracy improves as the size of the data set increases. Therefore, one of the best ways to improve the accuracy and reliability of systems is to build a large and comprehensive data set for training machine learning algorithms. This is important for the safety of automated driving.

Positioning must be sufficiently accurate

Using GPS satellites, the location of a vehicle can be determined to an accuracy of a few metres. This level of accuracy is fine for navigation, where the aim is to determine the rough location of a vehicle in relation to traffic routes and crossings.

In terms of automated driving and the collection of high-quality geospatial data, this accuracy is not sufficient – other positioning methods are also needed. The goal is to pinpoint the location of a vehicle with an accuracy that allows the vehicle to safely remain in its lane without any risk of hitting the kerb or any obstacles. Another goal is to build a framework for high-precision geospatial data applications that require up-to-date sensor data.

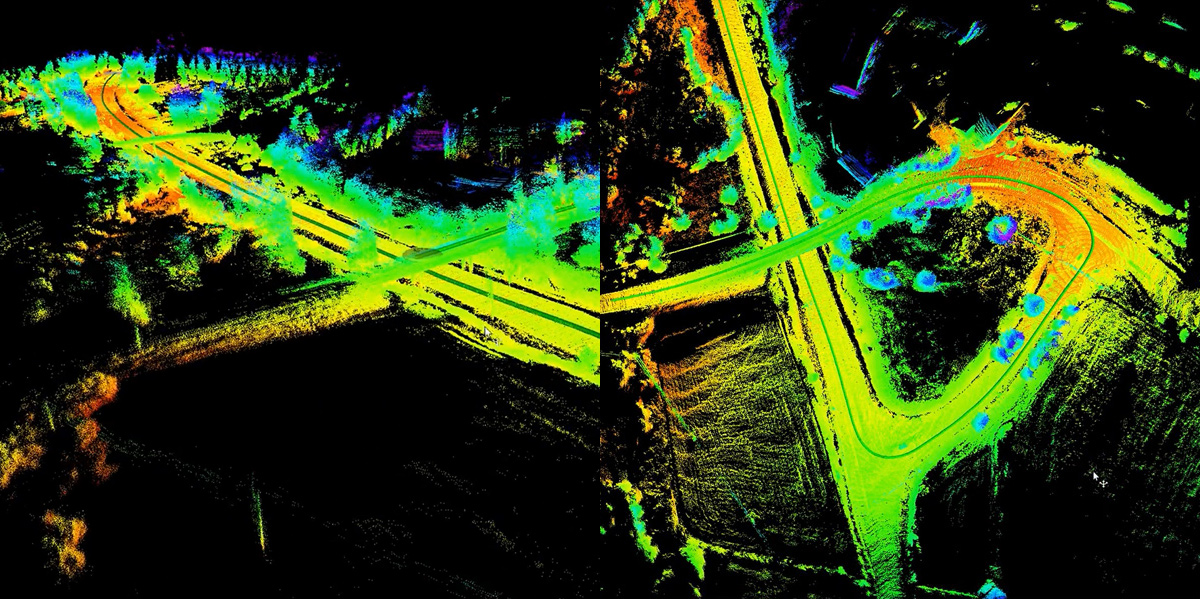

Autonomous vehicles use simultaneous localisation and mapping (SLAM) methods. This means that they localise themselves and map their environment at the same time. Data provided by a vehicle’s laser scanners, cameras and other sensors is used both to determine the relative movement of the vehicle and to build a sensor data-based map of the surrounding area.

The location of the vehicle is calculated on the basis of successive sensor observations. Because each new position builds on the previous one, small measurement errors accumulate and become a significant source of inaccuracy as the driving distance increases. The magnitude of the localisation error generated over time depends mainly on the sensors' measurement accuracy. For example, laser scanners used on autonomous vehicles have a high accuracy, approximately a couple of centimetres over a hundred metres.
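
The accumulation of error can be illustrated with a deliberately simplified one-dimensional sketch. It assumes the vehicle moves one metre between observations and that every measured step carries a small systematic scale error plus random noise. The figures are illustrative assumptions, with the scale error chosen to match roughly two centimetres per hundred metres.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

n_steps = 1000          # number of successive observations
true_step = 1.0         # true movement between observations, metres
scale_error = 0.0002    # assumed systematic error: 2 cm per 100 m
noise_std = 0.001       # assumed random error per step, metres

# Each measured step is slightly wrong; the position is the running sum.
measured_steps = true_step * (1.0 + scale_error) + rng.normal(0.0, noise_std, n_steps)
true_position = true_step * np.arange(1, n_steps + 1)
estimated_position = np.cumsum(measured_steps)

# Absolute localisation error after each step.
drift = np.abs(estimated_position - true_position)

for distance in (10, 100, 1000):
    print(f"after {distance:4d} m: drift = {drift[distance - 1]:.3f} m")
```

Even though each individual step is measured very precisely, the error after a kilometre is far larger than after ten metres, which is why a correction against a map or another positioning method is needed.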

Localisation errors can be minimised by using correction algorithms and measurement models

Localisation errors can be effectively reduced by using map-based correction algorithms. The remaining error can then be very small, and satellite positioning may not be needed. SLAM methods are particularly useful for positioning vehicles in tunnels and in the built environment, where satellite positioning suffers from weak signals.

To increase the reliability and accuracy of localisation, autonomous systems use a number of supporting positioning methods. For example, by combining satellite positioning, acceleration-based inertial navigation and SLAM, it is possible to build a positioning system that also maintains its accuracy in difficult environments.

Positioning estimates produced by independent positioning methods are often combined into a single estimate. This is done by using a mathematical measurement model that helps to minimise any errors in individual measurements.
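
One common and simple way to combine independent estimates is inverse-variance weighting, in which each estimate is weighted by how reliable it is. The sketch below is an assumed illustration, not the specific model used in any particular vehicle; the function name `fuse_estimates` and the sample positions and variances are hypothetical.

```python
import numpy as np

def fuse_estimates(positions, variances):
    """Combine independent position estimates by inverse-variance weighting.

    Estimates with a small variance (high reliability) get a large weight.
    Returns the fused position and its variance.
    """
    positions = np.asarray(positions, dtype=float)
    variances = np.asarray(variances, dtype=float)
    weights = 1.0 / variances
    fused = np.sum(weights * positions) / np.sum(weights)
    fused_variance = 1.0 / np.sum(weights)
    return fused, fused_variance

# Hypothetical position along the road (metres) from three methods:
# satellite positioning, inertial navigation and SLAM.
positions = [102.0, 100.0, 100.2]
variances = [4.0, 1.0, 0.25]   # assumed error variances, m^2

fused_position, fused_var = fuse_estimates(positions, variances)
print(f"fused position: {fused_position:.2f} m, variance: {fused_var:.3f} m^2")
```

The fused variance is smaller than the variance of the best individual method, which reflects the benefit of combining independent sources.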

Mathematical measurement models, such as Kalman and particle filters, build a simple theoretical model of the dynamics of the measured object. When defining the location of a vehicle, a measurement model improves the positioning estimate by including prior information, for example, about typical accelerations affecting the vehicle and any limitations to its steering geometry.
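
A minimal Kalman filter sketch shows how such prior information enters the estimate. It assumes a one-dimensional vehicle whose state is position and speed, a constant-velocity motion model, and a bound on typical accelerations expressed as `accel_std`. All numeric values are illustrative assumptions.

```python
import numpy as np

def kalman_step(x, P, z, dt, accel_std, meas_std):
    """One predict-and-update cycle for a state x = [position, speed]."""
    F = np.array([[1.0, dt], [0.0, 1.0]])      # constant-velocity dynamics
    G = np.array([[0.5 * dt**2], [dt]])        # effect of an unknown acceleration
    Q = G @ G.T * accel_std**2                 # prior on typical accelerations
    H = np.array([[1.0, 0.0]])                 # only position is measured
    R = np.array([[meas_std**2]])              # measurement noise variance

    # Predict where the vehicle should be according to the dynamics model.
    x = F @ x
    P = F @ P @ F.T + Q

    # Update the prediction with the new position measurement.
    innovation = z - H @ x
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x = x + K @ innovation
    P = (np.eye(2) - K @ H) @ P
    return x, P

rng = np.random.default_rng(seed=1)
dt, true_speed = 1.0, 10.0          # assumed: 1 s between fixes, 10 m/s
accel_std, meas_std = 0.1, 1.0      # assumed model and sensor noise levels

x = np.array([0.0, 0.0])            # initial guess: at origin, standing still
P = np.diag([100.0, 100.0])         # large initial uncertainty
for k in range(1, 51):
    z = true_speed * k * dt + rng.normal(0.0, meas_std)
    x, P = kalman_step(x, P, z, dt, accel_std, meas_std)

print(f"position: {x[0]:.1f} m, speed: {x[1]:.2f} m/s")
```

The filter never measures speed directly; it infers it from successive position fixes and the dynamics model, and the covariance `P` expresses how much the result can be trusted.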

Measurement models estimate the reliability of each measurement. The final positioning estimate is then weighted by these reliability estimates, so that clearly incorrect measurements can be excluded. Measurement models also calculate the reliability of the localisation data itself. As a result, the system can be controlled so that it continues to operate correctly if there is a malfunction.
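
Excluding clearly incorrect measurements is often implemented as a gating test: a measurement is accepted only if it lies within a few standard deviations of what the model predicts. The following sketch assumes a three-sigma gate and hypothetical values; the function name `is_consistent` is introduced for this example only.

```python
import math

def is_consistent(measurement, predicted, predicted_var, measurement_var, gate_sigma=3.0):
    """Return True if the measurement agrees with the prediction.

    The expected spread of the difference combines the uncertainty of
    the prediction and of the measurement itself.
    """
    innovation = measurement - predicted
    innovation_std = math.sqrt(predicted_var + measurement_var)
    return abs(innovation) <= gate_sigma * innovation_std

# Assumed predicted position 100.0 m with variance 0.25 m^2,
# and position fixes with variance 1.0 m^2.
predicted, predicted_var, measurement_var = 100.0, 0.25, 1.0
measurements = [101.5, 99.2, 130.0]   # the last one is clearly faulty

accepted = [
    z for z in measurements
    if is_consistent(z, predicted, predicted_var, measurement_var)
]
print(accepted)
```

A long run of rejected measurements from one sensor is itself useful information, because it suggests that the sensor may be malfunctioning.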